This is a follow-up to my earlier blog ‘Nobody Knows Which Schools Are Good’. I’d recommend reading it first.

It is the 21st of May 2024, the biggest night of the year for state-funded schools in England. Tonight is the night when headteachers find out how they will be judged this summer. The great and the good of the education world gather together in a central London hotel for a televised event. At 7pm, the operators of the National Lottery, Camelot, are invited to the stage with Guinevere, one of their draw machines containing 49 balls. Each ball represents a possible way of measuring school performance, the list of which is assembled by a committee of the Department for Education. Through this committee, politicians, civil servants, trades unions and the inspectorate can all influence the performance measures that comprise the secret list of 49. They cannot influence which six balls Guinevere selects.

The room falls silent as the six balls are drawn and we learn that this summer, schools will be subject to the following assessments:

- An arithmetic fluency test, sat by all students in Years 2, 5 and 8;

- A sample humanities test, where all students currently in Years 4 and 9 will be randomised to sit a history, geography or religious studies paper;

- A pupil survey of wider-curricular activities taken within and beyond normal school hours over the past year;

- A count of teacher turnover in the past three years, excluding retirements of those aged 55 and over;

- A detailed census of the whereabouts of every child who has left the school in the past 12 months; and

- A written submission detailing science practical experiments carried out by each year group this academic year.

What will happen to these assessments when they are completed? We won’t tell you, at least not in advance! The information will be aggregated using rules that vary from year to year. Some years your school’s assessment metrics will include pupils who have long since left the school; other years we might choose to give you a grace period for those who have recently arrived. One year we might ask headteachers to invigilate assessments at neighbouring schools. In another year we might require you to hold all assessments in school halls and gyms.
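For readers who like to see the mechanics, the draw itself is just a uniform sample without replacement. The sketch below is illustrative only: the metric names are hypothetical placeholders (the real list of 49 is secret), and `draw_metrics` and its `seed` parameter are my own inventions, included so the example is repeatable.

```python
import random


def draw_metrics(candidates, k=6, seed=None):
    """Draw k distinct metrics uniformly at random, without replacement."""
    candidates = list(candidates)
    if k > len(candidates):
        raise ValueError("cannot draw more balls than the machine holds")
    # A dedicated generator keeps the draw independent of global random state.
    rng = random.Random(seed)
    return rng.sample(candidates, k)


# Hypothetical placeholder names standing in for the committee's secret list.
candidates = [f"metric_{i:02d}" for i in range(1, 50)]

# The seed is only here to make the example reproducible; Guinevere has none.
drawn = draw_metrics(candidates, k=6, seed=2024)
print(drawn)
```

The committee controls what goes into `candidates`; nobody controls what comes out of `draw_metrics`.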

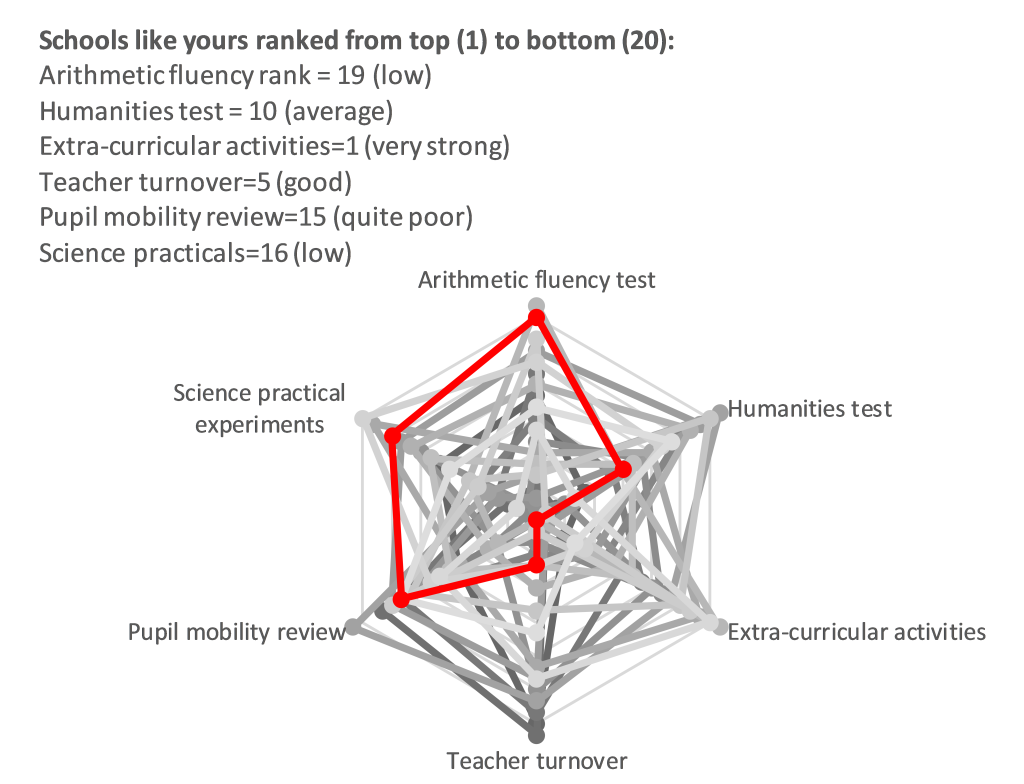

Performance on these assessments could be published (that’s quite a separate debate). We shouldn’t create national rankings of performance on any of these metrics since it is meaningless to describe the ‘quality’ of two schools that serve very different communities for reasons I’ve written about in the past (and summarise here). Instead, I’d let schools see how they compare to the (named) 19 schools who are most similar to them in their demographic circumstances. Where they are weak (e.g. arithmetic in the example below), it gives heads a very clear steer as to who they should visit to learn about effective practice for a school community like theirs.
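One way the ‘19 most similar schools’ could be found is a simple nearest-neighbour search over standardised demographic measures. This is a minimal sketch under my own assumptions, not a description of any existing system: the three features (share of pupils on free school meals, share with English as an additional language, mean prior attainment), the school names and the function `most_similar` are all hypothetical.

```python
import math


def most_similar(schools, target, n=19):
    """Return the n schools closest to `target` on standardised demographics."""
    names = list(schools)
    dims = len(schools[target])
    # Standardise each feature so that no single one dominates the distance.
    means = [sum(schools[s][d] for s in names) / len(names) for d in range(dims)]
    sds = [
        math.sqrt(sum((schools[s][d] - means[d]) ** 2 for s in names) / len(names))
        or 1.0  # guard against a feature that is identical in every school
        for d in range(dims)
    ]

    def z(s):
        return [(schools[s][d] - means[d]) / sds[d] for d in range(dims)]

    zt = z(target)
    others = [s for s in names if s != target]
    others.sort(key=lambda s: math.dist(z(s), zt))
    return others[:n]


# Hypothetical data: (free school meals share, EAL share, mean prior attainment).
schools = {
    "A": (0.40, 0.30, 95.0),
    "B": (0.42, 0.28, 96.0),
    "C": (0.10, 0.05, 108.0),
    "D": (0.38, 0.33, 94.0),
    "E": (0.12, 0.07, 107.0),
}
print(most_similar(schools, "A", n=2))
```

With real data `n` would be 19, and the choice of demographic features would itself need debate; the point is only that each school is compared with named peers rather than placed on a national ladder.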

We must accept that all targets are distortionary

Now, I know what you are thinking… What a strange way to judge schools when we really care whether schools are doing well on broad measures of attainment. Why don’t we reform our accountability metrics one more time to ensure they are perfectly aligned with everything we’d like schools to do? That way, the only route to improve scores is to make schools truly better. Unfortunately, unless we test children for almost as much time as they are at school, it will be impossible for us to create metrics that fully reflect a ‘good’ education. This is because a test can only ever sample a tiny fraction of the domain of knowledge and quality of experiences that we want children to acquire.

Each annual tweak of our published curriculum, our qualifications, our school performance measures is another attempt to create ‘tests worth teaching to’, or targets that truly reflect the qualities of a school we care about. And with each new metric, the system bows towards the thing that we say we’ll measure. Learning that a secondary school scored below -0.5 on Progress 8 might well have been a good method for working out which schools needed support. But the moment it converts from a private rule of thumb, not known by schools, into a target it creates a source of distortion. And it is ludicrous to declare that schools simply should not distort their practice in the ways I described in the previous blog since the metrics only exist to incentivise schools to do as well as they can on them. If we decide to operate an education system where there are consequences for performing poorly on metrics, then distortions are hard to avoid.

We have to stop this infinite cycle of reform. Our system is exhausted by constantly creating new curricula, schemes of work and assessment materials each time the metrics switch, and the metrics keep switching because judging a ‘good’ education is inherently complex.

The case for deliberate ambiguity in what we mean by ‘good’

The random drawing of a set of six (or four or 20…) accountability metrics each year might seem unusual, but it is simply an enactment of a new type of accountability for public services, advocated by Prof David Spiegelhalter at the University of Cambridge and Sir Andrew Dilnot. (Prof Dylan Wiliam also proposed a nice sampling approach to assessment in the Bew Review of KS2 SATs and I recommend Tim Harford’s book Messy if you’d like to read more about measurement and accountability of complex organisations.)

We need deliberate ambiguity as to what might be asked and how it might be evaluated. If schools cannot know how they will be judged, the system becomes impossible to game. Our instruction to schools is to teach the National Curriculum and provide a ‘good’ education to the best of their ability. The only way they can hope to be judged as doing well is to work hard at delivering a good education to students that is well-aligned with the cultural values of society. The reason why it is impossible to game is that gaming on one possible metric almost always reduces performance on another.

We want you to teach humanities to Year 9 in line with the intention of the National Curriculum, but if we told you a government assessment would be administered it would distort what else you taught them. We want to ensure that Year 4s have achieved good levels of basic arithmetic fluency, but if we told you a government assessment would be administered it would lead you to neglect other parts of the curriculum. If we don’t know the game, we can’t game it. The only game I’m asking you to play is to teach the National Curriculum to the best of your ability.

Ever-changing metrics generate a framework for experimentation

One nice element of having assessments that drop into and out of the lottery machine is that we can experiment with novel methods of assessment. For example, we’ve been dithering over whether to replace the KS2 writing portfolio with a timed assessment marked using comparative judgement. Under this system, placing something in the basket of potential assessments one year doesn’t mean it has to stay there if we decide it hasn’t adequately captured an aspect of schooling we care about. Similarly, we can experiment with multi-year metrics and sampling of students so that not all take tests if we choose. We can use the existing GCSE exams data, but without pre-specifying how exactly, leaving schools free to create end-point KS4 assessments that prioritise the needs of each individual student.

If I were on the assessment committee, I’d make the case for including some non-attainment metrics, including those that act as early warning signals about the health of our schools. These could include teacher turnover (particularly NQT wastage) and wellbeing, pupil or parental satisfaction, engagement in the wider curriculum, and so on. Equally, I’d make a case that schools should be assessed every so often on how well they’ve supported neighbouring schools, either through neighbourhood performance metrics or surveys of engagement and support.

I might also let politicians have one ‘bonus’ ball, where they pre-announce an assessment will be included for the next three years. Think of these as ‘deliberate distortion’ balls, designed to bend schools in a new curriculum or pedagogic direction. The phonics test and multiplication checks are examples of these – they’re not really there to measure student performance, but rather to send a clear signal about what should be happening in Years 1 and 4. (I don’t think you should leave them in place indefinitely – the presence of phonics is secure in state schools now and I’ve seen too many strong readers over-prepared for a phonics check to the neglect of other key Year 1 curriculum areas such as maths.)

Deliberate ambiguity makes for ‘messy’ regulation

It is unlikely that any regulator or politician would choose to adopt such a system of messy regulation. It makes manipulation of schools by one individual, whether Chief Inspector or Secretary of State, more complex. It would disrupt the comfortable and predictable cycle of data collection and monitoring by civil servants and inspectors. It makes it impossible for politicians to publish metrics showing how things have improved (and they always improve, never decline) under their administration.

The system wouldn’t be popular with newspapers, governors or parents since the ‘quality’ of education at a school would be complex to interpret from one year to the next. This complexity simply reflects the complexity inherent in describing how ‘good’ a school is – we should be more honest with the public about that.

Headteachers I’ve suggested these ideas to tend to be horrified since they are used to systems of control and strategising over how to manufacture school performance! It is certainly true that greater uncertainty will create greater stress, but I like to think this could be counterbalanced by reducing the consequences associated with any particular outcome metric.

I don’t know whether the teaching profession can live under this type of uncertainty, where we won’t tell you how we will assess how well you are delivering the National Curriculum (broadly defined). We won’t tell you what sorts of administration arrangements we’ll be putting in place – around the delivery and collection of scripts, around the monitoring system, around regulation of extra time or eligibility for readers and scribes. We won’t tell you exactly which of the hundreds of students who pass in and out of your school you will be held accountable for.

As John Kay argues in his book, Obliquity, it is impossible to create rankings of complex constructs such as schools where there is no clear means for resolving how these constructs should be aggregated. We cannot ever end up with a definitive, uni-dimensional scale of schools from the very worst to the very best. And that’s because nobody knows, for sure, which schools are good. We end up with something quite fuzzy from ‘definitely needs some help getting better across a range of areas’ to ‘pretty confident they’ll be fine if left alone’.

None of the data this ungameable game generates will look nice in newspaper league table rankings, but it will, in my view, give us and schools a richer and more honest view of practice across our education system. And, most importantly, it will remove the worst excesses of dysfunctional behaviour on the part of schools. Behaviour that inevitably hits children who are struggling to learn or who come from vulnerable homes most of all.
